
Are GPUs Faster Than CPUs? | In-Depth Guide for Beginners

Have you ever wondered why dedicated GPUs outperform CPUs at certain workloads? It is no coincidence that they have been preferred over CPUs for mining.

You probably remember the chip shortage that began in 2020, the dark times when an ordinary graphics card could cost more than $1,000.

With new technology arriving all the time and every answer wrapped in jargon, the science behind all this can be hard to follow.

Key Takeaways

- GPUs have more cores than CPUs and are optimized for throughput and parallel processing.

- CPUs have lower latency and are designed for multitasking, handling diverse and random instructions.

- GPUs are primarily used for rendering graphics, while CPUs handle general tasks such as spreadsheets and video editing.

- A CPU can only make effective use of a limited number of cores, and adding more cores can make each individual core slower.

The Short Version

Think of GPUs as the heavy lifters with tons of cores, perfect for running huge numbers of similar calculations at once. CPUs? They have far fewer cores, but each one is fast and flexible, and their low latency lets them respond to very different tasks and switch between them quickly.

On the surface, GPUs and CPUs seem pretty similar. They’re both silicon chips hanging out on a PCB with heat sinks keeping them cool. But when you really dig into how they’re built, the differences are huge—and those differences make them good at totally different jobs in your PC.

Basically, CPUs are the multitaskers, and GPUs are the workhorses. Both are important, depending on what you’re trying to do—whether it’s organizing your life on spreadsheets or cranking up the graphics in your favorite game.

CPUs have lower latency compared to GPUs

Modern computers are all-purpose machines: spreadsheets, Discord calls, music playback, audio and video editing, and the list goes on. Most of this work is handled by the CPU.

As a result, your CPU has to cope with a diverse, unpredictable stream of instructions in order to multitask. It can do that because CPUs, unlike graphics cards, are designed for low latency: each individual instruction gets finished as quickly as possible.
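To make the latency idea a bit more concrete, here is a toy cost model in Python. It assumes a job takes a fixed start-up delay plus the time to chew through the work, and it compares two imaginary processors. The names `LOW_LATENCY` and `HIGH_THROUGHPUT` and all the numbers are invented for illustration; they are not measurements of any real CPU or GPU.

```python
# Toy cost model: time for a batch = fixed start-up latency + items / throughput.
# Every number here is made up for illustration, not measured on real hardware.

def batch_time(items, latency_s, items_per_s):
    """Seconds to finish `items` pieces of work on one hypothetical processor."""
    return latency_s + items / items_per_s

# Hypothetical "CPU-like" processor: responds almost at once, modest throughput.
LOW_LATENCY = {"latency_s": 1e-6, "items_per_s": 1e8}
# Hypothetical "GPU-like" processor: slower to get going, much higher throughput.
HIGH_THROUGHPUT = {"latency_s": 1e-4, "items_per_s": 1e10}

def faster_choice(items):
    """Return which hypothetical processor finishes a batch of `items` first."""
    cpu_like = batch_time(items, **LOW_LATENCY)
    gpu_like = batch_time(items, **HIGH_THROUGHPUT)
    return "low-latency" if cpu_like <= gpu_like else "high-throughput"

for n in (10, 1_000, 100_000, 10_000_000):
    print(n, faster_choice(n))
```

With these made-up numbers, the small batches finish first on the low-latency processor and the large ones on the high-throughput processor. That is the general shape of the trade-off, even though real hardware is far messier.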

GPUs are optimized for throughput and parallel processing

Whereas your computer’s main CPU excels at doing a variety of tasks quickly, a GPU was built to do essentially one thing: render graphics. That means performing a huge number of very similar calculations at the same time.

Because of this, GPUs prioritize raw throughput, whereas CPUs are intended to be considerably more flexible and balanced.

To get through all of that similar math, GPUs have a large number of tiny, identical compute units. Those calculations decide how your character scored a double kill, how Kratos hurled his axe, or anything else that appears on your screen.
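Here is a rough sketch of what "lots of nearly identical calculations" looks like in code. It is plain Python, so nothing actually runs on a GPU. The `shade` function is a hypothetical stand-in for the small program a GPU would run for each pixel, and the gradient it produces is just an example.

```python
# Every pixel runs the same small function, and no pixel needs another pixel's
# result. A GPU would give each pixel its own thread; a plain loop stands in here.

def shade(x, y, width, height):
    """Hypothetical per-pixel function: a simple gradient as an (r, g, b) tuple."""
    r = int(255 * x / (width - 1))
    g = int(255 * y / (height - 1))
    return (r, g, 128)

def render(width, height):
    """Build the image row by row; the order is irrelevant since calls are independent."""
    return [[shade(x, y, width, height) for x in range(width)] for y in range(height)]

image = render(4, 3)  # tiny 4x3 "frame"
print(image[0][0], image[2][3])
```

Because no call to `shade` depends on any other, the work could be split across 12 compute units or 12,000 without changing the result. That is the property GPUs are built to exploit.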

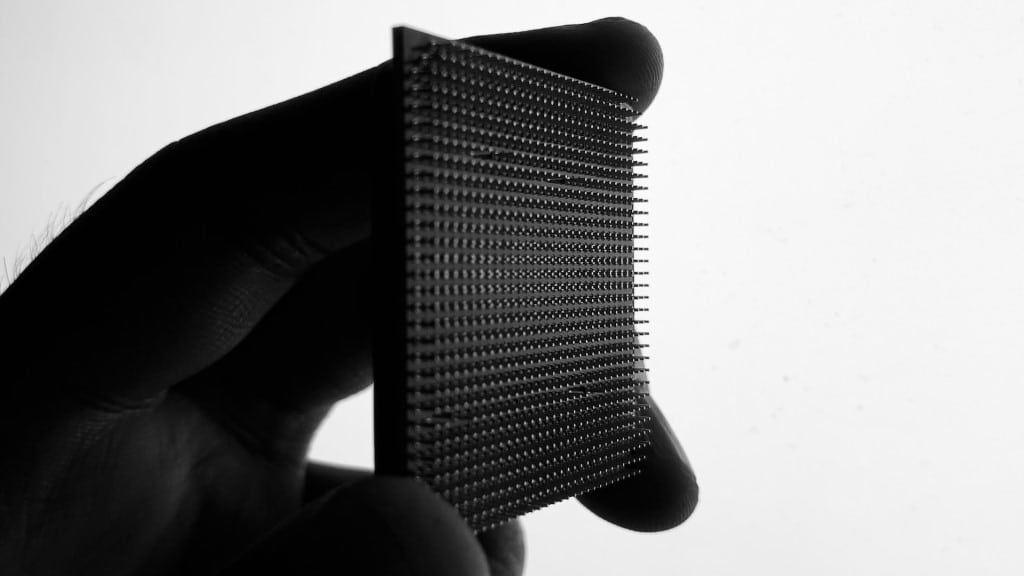

Why can’t CPUs have as many cores as GPUs?

So, it’s true that a CPU core can do anything that a GPU core can.

CPUs don’t have as many cores as GPUs because most software wouldn’t be able to use them effectively. On top of that, the cores you do use would likely perform worse.

CPUs with absurdly high core counts do exist, but they are not aimed at consumers like you and me. They are usually designed with a specific job in mind, where they offer great throughput and efficiency.

You can’t effectively utilize all the cores

Each CPU core functions as a separate processor. Writing software that can efficiently use many processors at once is not exactly easy.

Most current AAA games make good use of roughly 4 cores, while some can take advantage of 6- and 8-core CPUs.

The number of cores you can use efficiently depends mostly on the type of work you want to do. Games tend to be quite complicated but offer limited parallelism, so putting more than a handful of cores to work is tricky.

In comparison, 3D graphics rendering is extremely parallel. Each scene is made up of many polygons that can be drawn independently, and the pixels that make up the final image are likewise independent of one another.

As a result, rendering scales extremely well as you add more compute resources.
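One standard way to put numbers on this is Amdahl's law, which estimates the best-case speedup when only part of a job can be split across cores. The article doesn't name it, so treat this as a side illustration. The "parallel fractions" below are guesses picked for the example, not measurements of any actual game or renderer.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Best-case speedup when only `parallel_fraction` of the work can be split up."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

# Illustrative guesses only: a workload that is half parallel versus one that
# is almost entirely parallel.
workloads = (("game-like, 50% parallel", 0.50), ("rendering-like, 99.9% parallel", 0.999))

for label, fraction in workloads:
    for cores in (4, 16, 1024):
        speedup = amdahl_speedup(fraction, cores)
        print(f"{label:32s} {cores:5d} cores -> {speedup:6.1f}x")
```

Under these assumptions the half-parallel workload never gets past a 2x speedup no matter how many cores you add, while the almost fully parallel one keeps gaining all the way up to 1024 cores.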

Worse performance from the cores you do use

As for why the cores you do use could perform worse: a chip has only a finite amount of die space, along with a fixed transistor and power budget.

Keep in mind that additional cores also demand more hardware-level management and more communication between cores. So as you increase the number of cores, you can devote fewer of those resources to each one.

Compared to a processor with 10 cores, a 100-core CPU built from the same budget will have around 10 times fewer transistors per core. As a result, each core will be significantly less capable, which hurts workloads that aren’t extremely parallel.
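The arithmetic behind that "10 times fewer" figure is simple enough to write down. In this sketch the 10-billion-transistor budget and the `shared_fraction` values (the slice of the chip spent on management and core-to-core communication) are hypothetical numbers chosen for the example, not figures from any real processor.

```python
def transistors_per_core(total_budget, cores, shared_fraction=0.0):
    """Split a fixed transistor budget evenly across cores.

    `shared_fraction` is the (hypothetical) share of the chip set aside for
    management and core-to-core communication rather than the cores themselves.
    """
    usable = total_budget * (1.0 - shared_fraction)
    return usable / cores

BUDGET = 10_000_000_000  # hypothetical 10 billion transistors, not a real chip

# Same budget, no overhead: each of 100 cores gets a tenth of what 10 cores get.
ten = transistors_per_core(BUDGET, 10)
hundred = transistors_per_core(BUDGET, 100)
print(ten / hundred)

# Assume, purely for illustration, that overhead grows with core count.
ten_shared = transistors_per_core(BUDGET, 10, shared_fraction=0.10)
hundred_shared = transistors_per_core(BUDGET, 100, shared_fraction=0.30)
print(ten_shared / hundred_shared)
```

The first ratio is exactly 10. Once the extra coordination overhead is counted, the gap per core gets wider still under these made-up fractions.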

Other benefits of parallel processing

The use of graphics cards for general-purpose computing, known as GPGPU, has become increasingly popular due to the GPU’s ability to process tasks in parallel.

Bitcoin mining and similar tasks require an enormous number of repetitive, independent calculations, and GPUs handle them much more efficiently than a computer’s CPU.

So, as you can see, GPUs are now used for more than just gaming. Even so, you generally shouldn’t try to run your operating system on a graphics card, because there are still big differences between your GPU and CPU.
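To see why mining-style work suits parallel hardware, here is a heavily simplified toy in Python. It is not how Bitcoin mining actually works: the real thing hashes a block header and compares the result against a numeric difficulty target. The `find_nonce` helper, the `"example block"` string and the `"000"` prefix are all made up for this sketch.

```python
import hashlib

def find_nonce(block_data, start, stop, prefix="000"):
    """Try nonces in [start, stop); return the first whose hash starts with `prefix`."""
    for nonce in range(start, stop):
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
    return None  # nothing in this range matched

# Split the search space into chunks. Each chunk could go to a different worker,
# because trying one nonce never depends on the result of trying another.
chunks = [(i * 25_000, (i + 1) * 25_000) for i in range(4)]
results = [find_nonce("example block", lo, hi) for lo, hi in chunks]

for (lo, hi), result in zip(chunks, results):
    print(f"nonces {lo}-{hi - 1}:", result)
```

Every guess is the same small calculation with a different input, and no guess needs to wait for another. That makes this kind of search easy to spread over thousands of small compute units.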

Final Thoughts

So that’s it. There is a lot more to it than I was able to cover here, but hopefully, I got you started on a path to understanding all of this. I tried to simplify everything as much as possible.

There is no denying the power of the GPU, especially at the rate it’s advancing, with recent models packing in more and more cores (CUDA cores, in NVIDIA’s terminology). However, that comes at the cost of more heat and higher power consumption.